The following provides a collection of information and resources associated with a paper and presentation given at ALASI 2017 – the Australian Learning Analytics Summer Institute in Brisbane on 30 November, 2017. Below you’ll find an abstract, a recording of a version of the presentation, the presentation slides and the references.

The paper examines the DIY development and use of a particular application of learning analytics (known as Know thy student) within a single course during 2015 and 2016. The paper argues that, given how little is known about the institutional implementation of learning analytics, examining teacher DIY learning analytics can reveal some interesting insights. The paper identifies three implications and three questions.

Three implications

- Institutional learning analytics currently falls short of an important goal.

If the goal of learning analytics is that “of getting key information to a human being who can use it” (Baker, 2016, p. 607) then institutional learning analytics is falling short, and not just at a specific institution.

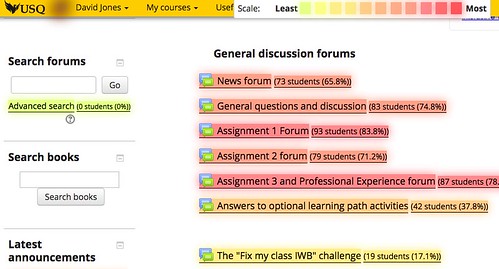

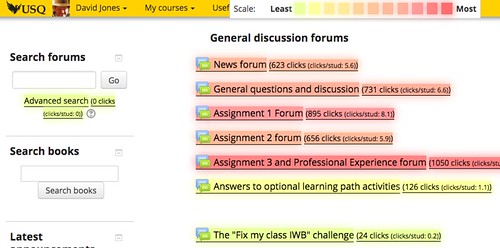

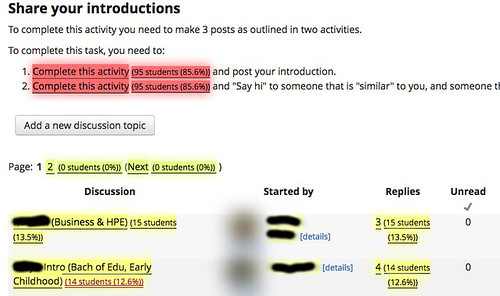

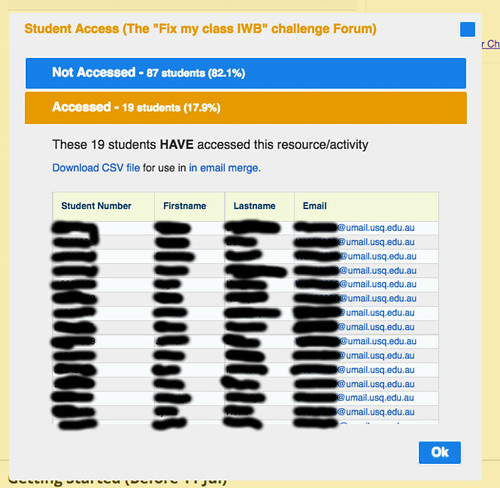

- Embedded, ubiquitous, contextual learning analytics encourages greater use and enables emergent practice.

This case suggests that learning analytics interventions designed to provide useful contextual data, embedded appropriately and ubiquitously throughout the learning environment, can enable significant levels of usage, including usage that was unplanned, emerged from experience, and changed practice.

In this case, Know thy student was used by the teacher on 666 different days (~91% of the days that the tool was available) to find out more about ~90% of the enrolled students. Graphical representations of this usage are described in the Usage section below.

- Teacher DIY learning analytics is possible.

Know thy student was implemented by a single academic using a laptop, widely available software (including some coding), and existing institutional data sources.

Three questions

- Does institutional learning analytics have an incomplete focus?

Research and practice around the institutional implementation of learning analytics appears to focus on “at scale”: learning analytics that can be used across multiple courses or an entire institution. That focus appears to come at the expense of learning analytics specific to a course or learning design, which appear to be more useful.

- Does the institutional implementation of learning analytics have an indefinite postponement problem?

Aspects of Know thy student are specific to the particular learning design within a single course. Existing institutional learning analytics implementations appear unlikely ever to have met such a course-specific requirement. It would have been indefinitely postponed.

- Should we, and how might we, enable teacher DIY learning analytics?

This case suggests that teacher DIY learning analytics is possible and potentially overcomes limitations in current institutional implementation of learning analytics. However, it’s also not without its challenges and limitations. Should institutions support teacher DIY learning analytics? How might that be done?

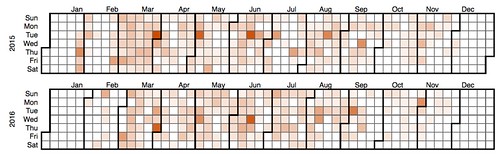

Usage

The following heat map shows the number of times Know thy student was used on each day during 2015 and 2016.

The following bar graph contains 761 “bars”. Each bar represents a unique student enrolled in this course, and its size shows the number of times Know thy student was used for that student. (One student record was evidently used for testing during the development of the tool.)
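For readers who want to produce similar summaries from their own data, the following is a minimal sketch of how counts like those behind the heat map and bar graph could be derived. It is not the code used for Know thy student. The log format, the student identifiers, the `summarise` function and the `days_available` value are all hypothetical and exist only for illustration.

```python
from collections import Counter
from datetime import date

# Hypothetical usage log: one (day, student_id) pair per use of the tool.
# The real tool's log format is not described in this post.
usage_log = [
    (date(2015, 3, 2), "s001"),
    (date(2015, 3, 2), "s002"),
    (date(2015, 3, 3), "s001"),
    (date(2016, 8, 15), "s003"),
]
enrolled = {"s001", "s002", "s003", "s004"}  # hypothetical enrolment list


def summarise(log, enrolled_students, days_available):
    """Return per-day counts, per-student counts and two coverage ratios."""
    # Uses per day: the values a heat map of daily usage would plot.
    uses_per_day = Counter(day for day, _ in log)
    # Uses per enrolled student: the values a per-student bar graph would plot.
    uses_per_student = Counter(
        student for _, student in log if student in enrolled_students
    )
    # Share of available days on which the tool was used at least once.
    day_coverage = len(uses_per_day) / days_available
    # Share of enrolled students looked up at least once.
    student_coverage = len(uses_per_student) / len(enrolled_students)
    return uses_per_day, uses_per_student, day_coverage, student_coverage


# days_available=10 is an arbitrary illustrative value.
per_day, per_student, day_cov, student_cov = summarise(
    usage_log, enrolled, days_available=10
)
print(f"days used: {len(per_day)} ({day_cov:.0%} of days available)")
print(f"students looked up: {len(per_student)} ({student_cov:.0%} of enrolled)")
```

The two `Counter` objects map directly onto the two graphs: one count per day and one count per student. The coverage ratios correspond to the kind of percentages quoted earlier in this post.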

Abstract

The paper on which the presentation is based has the following abstract.

Learning analytics promises to provide insights that can help improve the quality of learning experiences. Since the late 2000s it has inspired significant investments in time and resources by researchers and institutions to identify and implement successful applications of learning analytics. However, there is limited evidence of successful at scale implementation, somewhat limited empirical research investigating the deployment of learning analytics, and subsequently concerns about the insight that guides the institutional implementation of learning analytics. This paper describes and examines the rationale, implementation and use of a single example of teacher do-it-yourself (DIY) learning analytics to add a different perspective. It identifies three implications and three questions about the institutional implementation of learning analytics that appear to generate interesting research questions for further investigation.

Presentation recording

The following is a recording of a talk given at CQUni a couple of weeks after ALASI. It uses the same slides as the original ALASI presentation. However, without a time limit, the description is a little expanded.

Slides

Also view and download here.

References

Baker, R. (2016). Stupid Tutoring Systems, Intelligent Humans. International Journal of Artificial Intelligence in Education, 26(2), 600–614. https://doi.org/10.1007/s40593-016-0105-0

Behrens, S. (2009). Shadow systems: the good, the bad and the ugly. Communications of the ACM, 52(2), 124–129.

Colvin, C., Dawson, S., Wade, A., & Gašević, D. (2017). Addressing the Challenges of Institutional Adoption. In C. Lang, G. Siemens, A. F. Wise, & D. Gašević (Eds.), The Handbook of Learning Analytics (1st ed., pp. 281–289). Alberta, Canada: Society for Learning Analytics Research (SoLAR).

Corrin, L., Kennedy, G., & Mulder, R. (2013). Enhancing learning analytics by understanding the needs of teachers. In Electric Dreams. Proceedings ascilite 2013 (pp. 201–205).

Díaz, O., & Arellano, C. (2015). The Augmented Web: Rationales, Opportunities, and Challenges on Browser-Side Transcoding. ACM Trans. Web, 9(2), 8:1–8:30. https://doi.org/10.1145/2735633

Dron, J. (2014). Ten Principles for Effective Tinkering (pp. 505–513). Presented at the E-Learn: World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education, Association for the Advancement of Computing in Education (AACE).

Ferguson, R. (2014). Learning analytics FAQs. Education. Retrieved from https://www.slideshare.net/R3beccaF/learning-analytics-fa-qs

Gašević, D., Dawson, S., Rogers, T., & Gasevic, D. (2015). Learning analytics should not promote one size fits all: The effects of instructional conditions in predicting academic success. The Internet and Higher Education, 28, 68–84. https://doi.org/10.1016/j.iheduc.2015.10.002

Germonprez, M., Hovorka, D., & Collopy, F. (2007). A theory of tailorable technology design. Journal of the Association for Information Systems, 8(6), 351–367.

Grover, S., & Pea, R. (2013). Computational Thinking in K-12: A Review of the State of the Field. Educational Researcher, 42(1), 38–43. https://doi.org/10.3102/0013189X12463051

Hatton, E. (1989). Levi-Strauss’s Bricolage and Theorizing Teachers’ Work. Anthropology and Education Quarterly, 20(2), 74–96.

Johnson, L., Adams Becker, S., Estrada, V., & Freeman, A. (2014). NMC Horizon Report: 2014 Higher Education Edition (No. 9780989733557). Austin, Texas. Retrieved from http://www.nmc.org/publications/2014-horizon-report-higher-ed

Jones, D., & Clark, D. (2014). Breaking BAD to bridge the reality/rhetoric chasm. In B. Hegarty, J. McDonald, & S. Loke (Eds.), Rhetoric and Reality: Critical perspectives on educational technology. Proceedings ascilite Dunedin 2014 (pp. 262–272).

Kay, A., & Goldberg, A. (1977). Personal Dynamic Media. Computer, 10(3), 31–41.

Ko, A. J., Abraham, R., Beckwith, L., Blackwell, A., Burnett, M., Erwig, M., … Wiedenbeck, S. (2011). The State of the Art in End-user Software Engineering. ACM Comput. Surv., 43(3), 21:1–21:44. https://doi.org/10.1145/1922649.1922658

Kruse, A., & Pongsajapan, R. (2012). Student-Centered Learning Analytics (CNDLS Thought Papers). Georgetown University. Retrieved from https://cndls.georgetown.edu/m/documents/thoughtpaper-krusepongsajapan.pdf

Levi-Strauss, C. (1966). The Savage Mind. Weidenfeld and Nicolson.

Liu, D. Y.-T. (2017). What do Academics really want out of Learning Analytics? – ASCILITE TELall Blog. Retrieved August 27, 2017, from http://blog.ascilite.org/what-academics-really-want-out-of-learning-analytics/

Liu, D. Y.-T., Bartimote-Aufflick, K., Pardo, A., & Bridgeman, A. J. (2017). Data-Driven Personalization of Student Learning Support in Higher Education. In A. Peña-Ayala (Ed.), Learning Analytics: Fundaments, Applications, and Trends (pp. 143–169). Springer International Publishing. https://doi.org/10.1007/978-3-319-52977-6_5

Lonn, S., Aguilar, S., & Teasley, S. D. (2013). Issues, Challenges, and Lessons Learned when Scaling Up a Learning Analytics Intervention. In Proceedings of the Third International Conference on Learning Analytics and Knowledge (pp. 235–239). New York, NY, USA: ACM. https://doi.org/10.1145/2460296.2460343

MacLean, A., Carter, K., Lövstrand, L., & Moran, T. (1990). User-tailorable Systems: Pressing the Issues with Buttons. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 175–182). New York, NY, USA: ACM. https://doi.org/10.1145/97243.97271

Mishra, P., & Koehler, M. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017–1054.

Norman, D. A. (1993). Things that make us smart: defending human attributes in the age of the machine. Cambridge, Mass: Perseus.

Repenning, A., Webb, D., & Ioannidou, A. (2010). Scalable Game Design and the Development of a Checklist for Getting Computational Thinking into Public Schools. In Proceedings of the 41st ACM Technical Symposium on Computer Science Education (pp. 265–269). New York, NY, USA: ACM. https://doi.org/10.1145/1734263.1734357

Scanlon, E., Sharples, M., Fenton-O’Creevy, M., Fleck, J., Cooban, C., Ferguson, R., … Waterhouse, P. (2013). Beyond prototypes: Enabling innovation in technology‐enhanced learning. London. Retrieved from http://tel.ioe.ac.uk/wp-content/uploads/2013/11/BeyondPrototypes.pdf

Sinha, R., & Sudhish, P. S. (2016). A principled approach to reproducible research: a comparative review towards scientific integrity in computational research. In 2016 IEEE International Symposium on Ethics in Engineering, Science and Technology (ETHICS) (pp. 1–9). https://doi.org/10.1109/ETHICS.2016.7560050

Wiley, D. (n.d.). The Reusability Paradox. Retrieved from http://cnx.org/content/m11898/latest/

Wiliam, D. (2006). Assessment: Learning communities can use it to engineer a bridge connecting teaching and learning. JSD, 27(1).

Yoo, Y., Boland, R. J., Lyytinen, K., & Majchrzak, A. (2012). Organizing for Innovation in the Digitized World. Organization Science, 23(5), 1398–1408.

Zittrain, J. L. (2006). The Generative Internet. Harvard Law Review, 119(7), 1974–2040.